Blog Details

| Blog Title: | Why is Reinforcement Learning such a hot topic at the moment? |
|---|---|
| Blogger: | sanket.lolge@gmail.com |


“Throw a robot into a maze and let it find an exit.”

Nowadays it is used for:

1. Self-driving cars

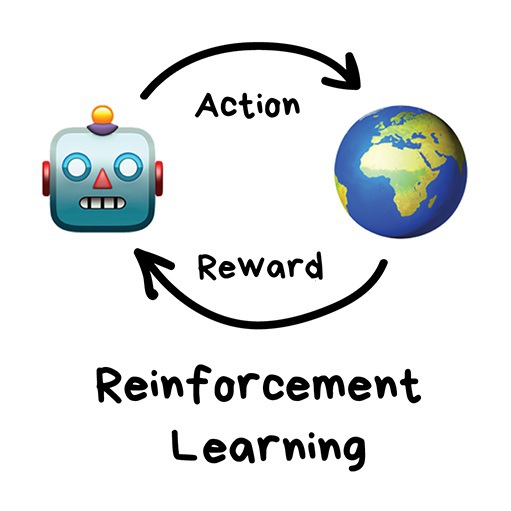

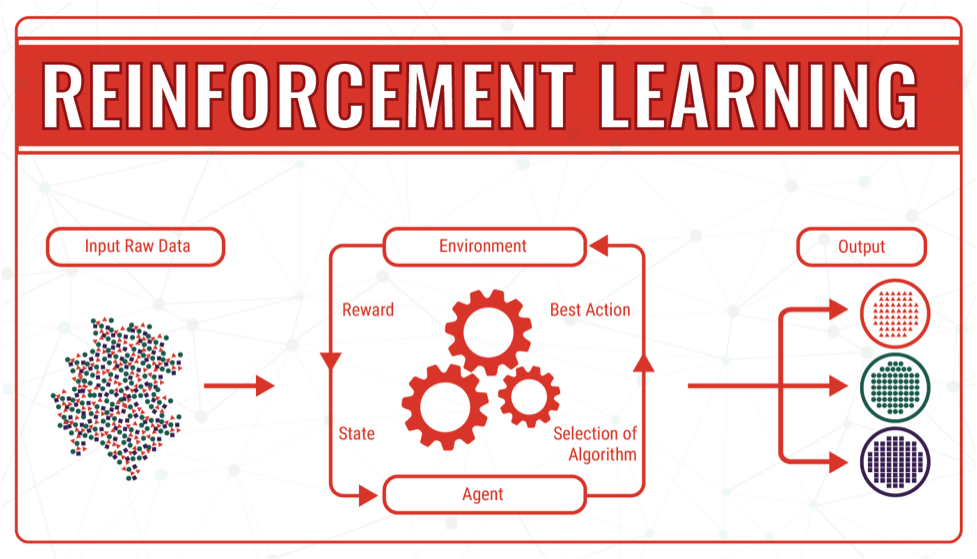

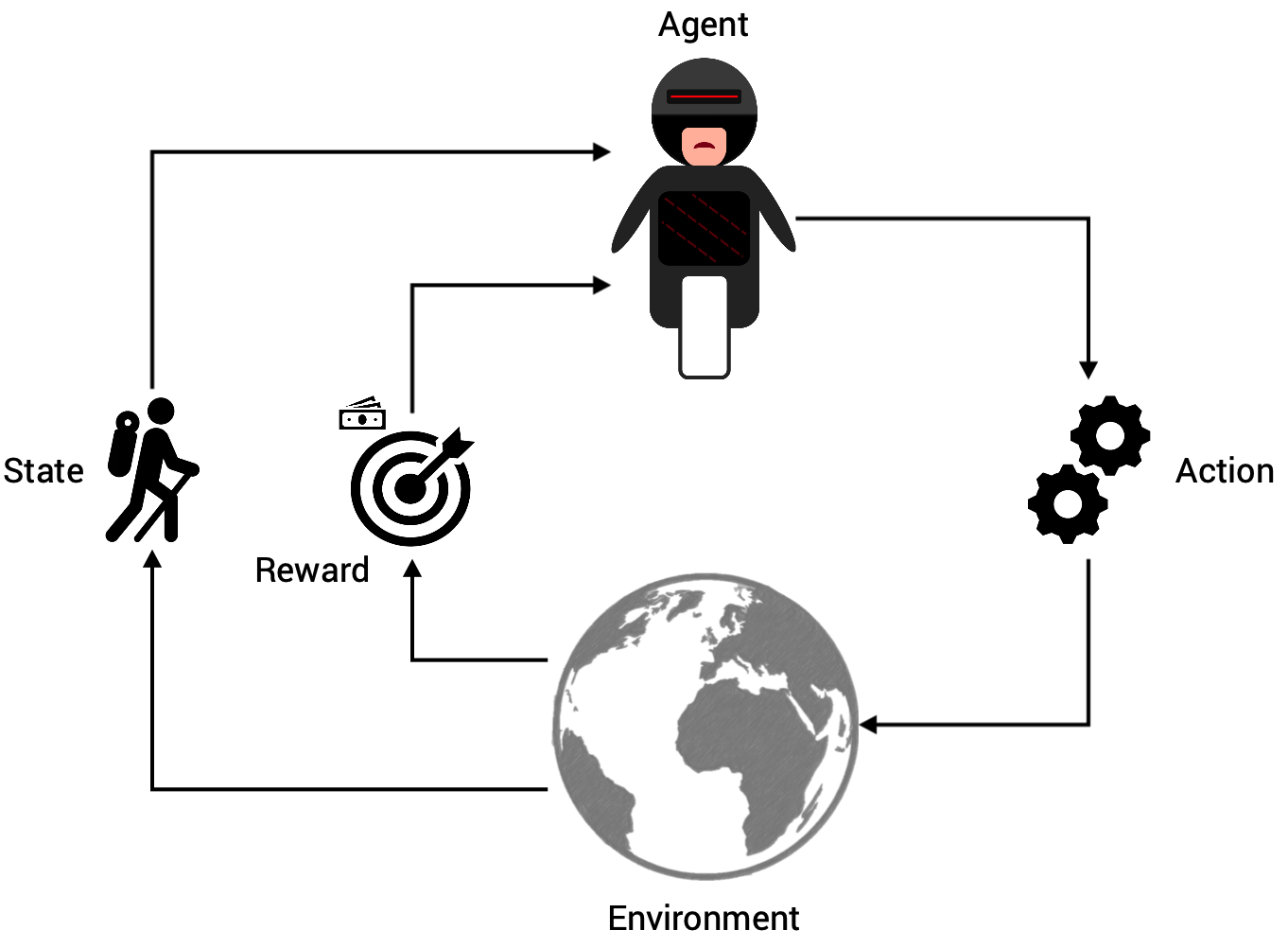

What is it?

Reinforcement Learning is a Machine Learning method that is concerned with how software agents should take actions in an environment. It is often combined with deep learning (deep reinforcement learning), and its aim is to help the agent maximize its cumulative reward.

The idea behind Reinforcement Learning is that an agent will learn from the environment by interacting with it and receiving rewards for performing actions.

Learning from interaction with the environment comes from our natural experiences. Imagine you’re a child in a living room. You see a fireplace, and you approach it.

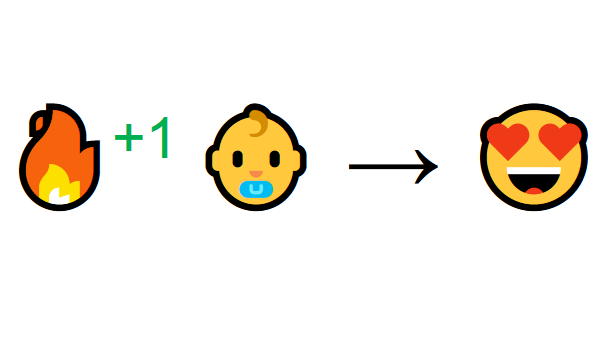

It’s warm, it’s positive, you feel good (Positive reward +1). You understand that fire is a positive thing.

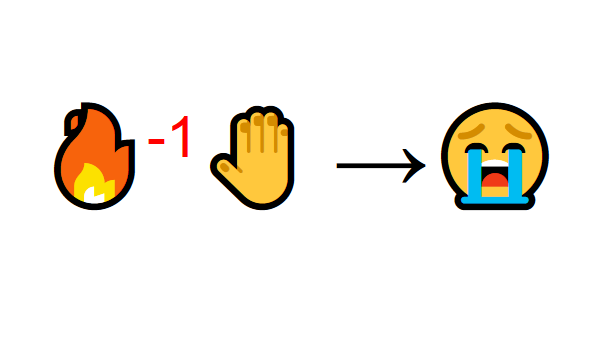

But then you try to touch the fire. Ouch! It burns your hand (Negative reward -1). You’ve just understood that fire is positive when you are a sufficient distance away, because it produces warmth. But get too close to it and you will be burned. That’s how humans learn: through interaction. Reinforcement Learning is simply a computational approach to learning from action.

There are two different approaches:

1. Model-Based: the agent relies on a model of its environment. For a self-driving car, this means it needs to memorize a map or parts of it, which quickly becomes impractical, since it is impossible for the poor self-driving car to memorize the whole planet.
2. Model-Free: the car doesn't memorize every movement but tries to generalize from situations and act rationally while obtaining the maximum reward.
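To make the distinction concrete, here is a minimal Python sketch on a hypothetical five-cell corridor that stands in for the car's "map", with the goal in the last cell. The model-based agent is handed the dynamics (`step`) and plans with value iteration without ever acting; the model-free agent treats `step` as a black box, collects experience, and learns action values with Q-learning. The corridor, the reward of 1 at the goal, and all hyperparameters are assumptions chosen for illustration.

```python
import random

# Hypothetical toy world standing in for the "map": corridor cells 0..4, goal in cell 4.
N_STATES = 5
GOAL = 4
ACTIONS = (-1, +1)  # move one cell left or right


def step(state, action):
    """Environment dynamics: return (next_state, reward); entering the goal pays +1."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def plan_model_based(gamma=0.9, sweeps=50):
    """Model-based: the dynamics are known, so the agent plans with value iteration."""
    values = [0.0] * N_STATES

    def backup(s, a):
        s2, r = step(s, a)  # query the model instead of acting in the world
        return r + gamma * values[s2]

    for _ in range(sweeps):
        for s in range(N_STATES):
            if s != GOAL:  # the goal is terminal and keeps value 0
                values[s] = max(backup(s, a) for a in ACTIONS)
    return {s: max(ACTIONS, key=lambda a: backup(s, a)) for s in range(N_STATES) if s != GOAL}


def learn_model_free(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Model-free: the agent only experiences sampled transitions and learns Q-values."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def greedy(s):
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])  # random tie-break

    for _ in range(episodes):
        s = 0
        while s != GOAL:
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(s)
            s2, r = step(s, a)  # one real interaction; the agent never inspects the rules
            target = r if s2 == GOAL else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])  # Q-learning update
            s = s2
    return {s: greedy(s) for s in range(N_STATES) if s != GOAL}


print(plan_model_based())  # {0: 1, 1: 1, 2: 1, 3: 1}
print(learn_model_free())  # {0: 1, 1: 1, 2: 1, 3: 1}
```

Both routes arrive at the same policy here (always move right). The difference is what the agent needs beforehand: a model to plan with, or enough interaction to learn from.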

Let’s start with a question: have you ever thought about how a living being learns something? I would like to present an example that reflects this learning behavior. If you imagine a child, you know that they want to explore everything and challenge their limits. Suppose a child tries to learn to ride a bicycle, i.e. to stay on it for as long as possible. The child sits down on the bicycle and tries to pedal. Sooner or later they will fall off the bike…

Now for the interesting part of this example: the child has performed an action in a certain environment that caused them to fall to the ground. On the basis of these experiences, they learn that they can ride the bicycle longer by avoiding the actions that led to the fall. A Reinforcement Learning agent also learns from such experiences. Each action is evaluated to see whether it was good or bad, and eventually the agent finds out which actions are best suited to reach its goal.

Below you can see the entire process in a diagram. An agent (e.g. the child or a robot) is in a certain environment. There it can perform actions, and each action moves the environment to a new state. The transition from the old state to the new state is evaluated, and a reward is granted accordingly.
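The cycle described above (observe a state, act, receive a reward, repeat) can be sketched in a few lines of Python. `ToyBicycle` is a made-up environment, not a real library: its state is the bike's tilt, each action leans left or right, a random wobble is added, and a tilt beyond ±3 counts as a fall. The agent here does not learn yet; the sketch only shows the interaction loop that learning algorithms are built around.

```python
import random


class ToyBicycle:
    """Hypothetical toy environment: the state is the bike's tilt; |tilt| > 3 means a fall."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.tilt = 0

    def reset(self):
        self.tilt = 0
        return self.tilt

    def step(self, action):
        """Apply an action (-1 = lean left, +1 = lean right); return (state, reward, done)."""
        self.tilt += action + self.rng.choice((-1, 0, 1))  # the action plus a random wobble
        fell = abs(self.tilt) > 3
        reward = -1 if fell else 1  # +1 for every step on the bike, -1 for falling off
        return self.tilt, reward, fell


def run_episode(env, policy, max_steps=100):
    """Agent-environment loop: observe the state, pick an action, collect the reward."""
    state = env.reset()
    total_reward = 0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses based on the current state
        state, reward, done = env.step(action)  # environment transitions and hands out a reward
        total_reward += reward
        if done:                                # the rider fell off: episode over
            break
    return total_reward


def lean_against_tilt(tilt):
    """A hand-written policy: lean to the side opposite the current tilt."""
    return -1 if tilt > 0 else 1


def random_policy(tilt):
    """An untrained agent: picks a direction at random."""
    return random.choice((-1, 1))


print(run_episode(ToyBicycle(seed=0), lean_against_tilt))  # prints 100
print(run_episode(ToyBicycle(seed=1), random_policy))
```

With these toy dynamics, `lean_against_tilt` keeps the tilt between -1 and 2, so it never falls and collects the full 100 points; a random policy will usually fall much earlier. A learning agent would have to discover something like the first policy from the rewards alone.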

So why is reinforcement learning such a hot topic at the moment?

Google’s AlphaGo and AlphaZero have made huge headlines in recent years: AlphaGo beat the world’s top Go players, and AlphaZero went on to defeat leading chess and shogi engines.

Terminology of Reinforcement Learning

In order to get a better understanding of the above example, I would first like to go through the terminology. The point here is to get a feeling for what each term can and cannot mean. If you want to dive deep into this amazing field, you have to get your basics right.

Agent

An agent is the protagonist in reinforcement learning. The agent must be able to perform actions in the environment and thus actively participate; it needs to be able to influence its reward in order to maximize it. An agent is often associated with a robot or other physical hardware, but it can also act in the background and give recommendations, e.g. in the form of a recommender system. The state of an agent is determined by its observation of the environment, and that state can take any form.

Actions

Actions are performed by the agent and have an impact on the environment in which the agent is operating. The action space describes the set of actions an agent can choose from; it can be either discrete or continuous. In a discrete action space, the agent can only perform a limited set of actions, e.g. it can only make one step forward, backward, left, or right per iteration. In continuous action spaces the agent has much more freedom, as it is no longer restricted to a finite set of specific movements but can also vary the radius or length of its movements at its own discretion. In both action spaces, however, it is important to make sure that the boundaries of the environment and the agent are respected.

Environment

The world in which the agent performs its actions is called the environment. Each action influences the environment to a greater or lesser extent and thus causes a state transition of the environment. There are usually rules that govern the environment’s dynamics, such as the laws of physics or the rules of a society, which in turn determine the consequences of the actions. The entire current state of the environment is monitored by an interpreter that is able to decide whether the performed actions were good or bad.

Reward

What counts as a “good” or “bad” action is defined by a reward function, and there are different models and ways to construct this function. If, for example, you consider chess again, not every move can be evaluated on its own, because in the end only winning counts. In this respect there is no general rule for what a reward function has to look like or how it is defined; it depends on the criteria that matter for the task. Examples of such criteria are the energy or time consumed until the goal is reached, or simply whether the ultimate goal is reached at all. The video game Super Mario illustrates the idea of a reward function. It maps states and actions to rewards using a simple set of rules:

1. When you reach the goal, you get a big reward.
2. For every coin you collect, you get a little reward.
3. The longer you need, the lower your reward will be.
4. If you fail, you get no reward at all.

Policy

So what is the ultimate goal of Reinforcement Learning? It can be put as simply as this: Reinforcement Learning wants to find a strategy that gives the best response to the given circumstances. That’s what you call an optimal policy. In general, a policy is a mapping from states to actions. An optimal policy therefore specifies which action to take in each state to achieve the highest cumulative reward. The biggest difficulty is learning an optimal policy: the agent has to explore the environment sufficiently to perform appropriate actions even in unlikely states.
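The difference between discrete and continuous action spaces can be sketched in Python. Everything here is an illustrative assumption: positions are 2-D points, the environment is a square from 0 to 10, and a continuous move is limited to a length of 1.5. Both variants clip the result so that the boundaries of the environment and of the agent are respected.

```python
import math

WORLD_SIZE = 10.0  # hypothetical environment boundary: coordinates stay within [0, WORLD_SIZE]
MAX_STEP = 1.5     # hypothetical limit of the agent: at most this far per iteration

# Discrete action space: a finite set of moves, one grid step per iteration.
DISCRETE_ACTIONS = {
    "forward": (0, 1),
    "backward": (0, -1),
    "left": (-1, 0),
    "right": (1, 0),
}


def clamp_to_world(pos):
    """Keep a position inside the environment's boundaries."""
    return tuple(min(max(c, 0.0), WORLD_SIZE) for c in pos)


def apply_discrete(pos, name):
    """Discrete action: pick one of the four named moves."""
    dx, dy = DISCRETE_ACTIONS[name]
    return clamp_to_world((pos[0] + dx, pos[1] + dy))


def apply_continuous(pos, angle, length):
    """Continuous action: any direction (radians) and any length, limited to MAX_STEP."""
    length = min(max(length, 0.0), MAX_STEP)
    return clamp_to_world((pos[0] + length * math.cos(angle), pos[1] + length * math.sin(angle)))


print(apply_discrete((5.0, 5.0), "forward"))   # (5.0, 6.0)
print(apply_discrete((0.0, 0.0), "left"))      # (0.0, 0.0): blocked by the boundary
print(apply_continuous((0.0, 0.0), 0.0, 5.0))  # (1.5, 0.0): length capped at MAX_STEP
```

In the discrete case the agent chooses among four names; in the continuous case it chooses two real numbers, so there are infinitely many possible actions.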
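The four Super Mario rules can be written as a small reward function. The concrete numbers (1000 for the goal, 10 per coin, 2 points subtracted per second) are invented for illustration, and the sketch assumes the reward is computed once per finished level; a per-step version would hand out the coin reward at the moment each coin is collected.

```python
GOAL_REWARD = 1000  # hypothetical "big reward" for reaching the goal (rule 1)
COIN_REWARD = 10    # hypothetical "little reward" per collected coin (rule 2)
TIME_PENALTY = 2    # hypothetical deduction per second needed (rule 3)


def mario_reward(reached_goal: bool, coins: int, seconds: float) -> float:
    """Turn the outcome of one level into a single number, following the four rules."""
    if not reached_goal:
        return 0.0  # rule 4: failing earns no reward at all, coins included
    reward = GOAL_REWARD + COIN_REWARD * coins - TIME_PENALTY * seconds
    return max(float(reward), 0.0)  # a very slow finish is never worth less than failing


print(mario_reward(True, coins=5, seconds=100))   # 850.0
print(mario_reward(True, coins=5, seconds=50))    # 950.0
print(mario_reward(False, coins=20, seconds=30))  # 0.0
```

Clamping at zero is one design choice among several; without it, a very slow finish could score worse than failing, which may or may not be what the designer wants.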
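Since a policy is just a mapping from states to actions, it can be sketched as a dictionary. The example assumes the agent has already estimated how valuable each action is in each state (the state names and numbers are made up). Picking the best-valued action everywhere gives a greedy policy, which is optimal only if those estimates are exact. `epsilon_greedy` shows one common way to keep exploring, so that rarely visited states still get tried.

```python
import random

# Hypothetical action-value estimates for a tiny maze: state -> {action: estimated value}.
Q_VALUES = {
    "start": {"left": 0.1, "right": 0.7},
    "hall": {"up": 0.9, "down": 0.2},
    "dead_end": {"back": 0.4, "wait": 0.0},
}


def greedy_policy(q_values):
    """Build a policy (state -> action) by choosing the highest-valued action in each state."""
    return {state: max(actions, key=actions.get) for state, actions in q_values.items()}


def epsilon_greedy(q_values, state, epsilon, rng=random):
    """Follow the greedy choice, but with probability epsilon try a random action instead."""
    actions = q_values[state]
    if rng.random() < epsilon:
        return rng.choice(list(actions))  # explore
    return max(actions, key=actions.get)  # exploit


print(greedy_policy(Q_VALUES))  # {'start': 'right', 'hall': 'up', 'dead_end': 'back'}
```

With `epsilon=0` the agent always exploits its current estimates; a small positive value such as 0.1 (an arbitrary choice here) keeps a little exploration going.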